Formal Methods for V&V and T&E of Autonomous Systems

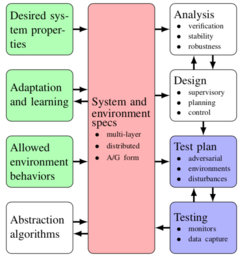

The goal of this project to advance the mathematical and algorithmic foundations of test and evaluation by com- bining advances in formal methods for specification and verification of reactive, distributed systems with algorithmic design of multi-agent test scenarios, and algorithmic evaluation of test results. Building on previous results in synthesis of formal contracts for performance of agents and subsystems, development of barrier certificate methods for provably safe performance of nonlinear control systems, and experience in the development of operational autonomous systems we are creating a mathematical framework for specifying the desired characteristics of multi-agent systems involving cooperative, adversarial, and adaptive interactions, develop algorithms for verification and validation (V&V) as well as test and evaluation (T&E) of the specifications, and per- form proof-of-concept demonstrations that demonstrate the use of formal methods for V&V and T&E of autonomous systems. If successful, our results will provide more systematic methods for describing the desired properties of military systems in complex environments; new algorithms for verification of system-level designs against those properties, synthesis of test plans, and analysis of test results; de- sign rules that allow adaptation and machine learning to be integrated without compromising system safety and performance specifications; and demonstration of the envisioned techniques in a multi-agent, free-flight arena that will provide experimental validation of the results.

Project participants:

|

Collaborators:

|

Objectives

The output of the project will be a set of tools (theory and algorithms) that address these challenges by demonstrating the use of formal techniques for specification, design, verification, and testing of autonomous systems operating in heterogeneous, multi-agent, adversarial environments. More specifically, we aim to accomplish the following specific objectives:

- Create a mathematical framework for specification of desired characteristics of multi-agent autonomous and semi-autonomous systems that account for cooperative, adversarial, and adaptive interactions between agents.

- Develop algorithms and software toolboxes for creation of layered models that are suitable for al- gorithmic verification of performance that incorporate existing test data as well as generate test plans and performance monitors for using in operational test and evaluation.

- Demonstrate key concepts on a proof-of-concept experimental testbed that demonstrates the integration of test data with formal methods for specification and verification of autonomous and semi-autonomous system performance.

References

- Evaluation Metrics of Object Detection for Quantitative System-Level Analysis of Autonomous Systems. Apurva Badithela, Tichakorn Wongpiromsarn, and Richard M. Murray. To appear, 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS).

- Reasoning over Test Specifications using Assume-Guarantee Contracts. Apurva Badithela, Josefine B. Graebener, Inigo Incer, and Richard M. Murray. Submitted, NASA Formal Methods (NFM), 2023.

- Barrier-Based Test Synthesis for Safety-Critical Systems Subject to Timed Reach-Avoid Specifications. Prithvi Akella, Mohamadreza Ahmadi, Richard M. Murray, Aaron D. Ames. Submitted, IEEE Transactions on Automatic Control, 2023.

- Minimally Constrained Testing for Autonomy with Temporal Logic Specifications. Apurva Badithela, Josefine Graebener, Richard M. Murray. RSS 2022 Workshop on Envisioning an Infrastructure for Multi-Robot and Collaborative Autonomy Testing and Evaluation.

- Synthesizing Reactive Test Environments for Autonomous Systems: Testing Reach-Avoid Specifications with Multi-Commodity Flows. Apurva Badithela, Josefine B. Graebener, Wyatt Ubellacker, Eric V. Mazumdar, Aaron D. Ames, Richard M. Murray. Submitted, 2023 International Conference on Robotics and Automation (ICRA).

- Evaluation Metrics for Object Detection for Autonomous Systems. Apurva Badithela, Tichakorn Wongpiromsarn, Richard M. Murray. Submitted, 2023 International Conference on Robotics and Automation (ICRA).

- Towards Better Test Coverage: Merging Unit Tests for Autonomous Systems. Josefine Graebener, Apurva Badithela, Richard M. Murray. 2022 NASA Formal Methods Symposium (NFM).

- Risk-Averse Decision Making Under Uncertainty. Mohamadreza Ahmadi, Ugo Rosolia, Michel D. Ingham, Richard M. Murray, Aaron D. Ames. 2021 AAAI Conference on Artificial Intelligence (AAAI-21).

- Leveraging Classification Metrics for Quantitative System-Level Analysis with Temporal Logic Specifications. Apurva Badithela, Tichakorn Wongpiromsarn, Richard M Murray. To appear, 2021 Conference on Decision and Control.

- Synthesis of Static Test Environments for Observing Sequence-like Behaviors in Autonomous Systems. Apurva Badithela, Richard M. Murray. Submitted, 2021 NASA Formal Methods (NFM).

- Formal Test Synthesis for Safety-Critical Autonomous Systems based on Control Barrier Functions. Prithvi Akella, Mohamadreza Ahmadi, Richard M. Murray, Aaron D. Ames. To appear, 2020 Conference on Decision and Control (CDC).

- Invariant Sets for Integrators and Quadrotor Obstacle Avoidance. Ludvig Doeser, Petter Nilsson, Aaron D. Ames, and Richard M. Murray. 2020 American Control Conference (ACC).

Research supported by the AFOSR Test and Evaluation program, grant FA9550-19-1-0302.

|

|

|