SURF 2013: Experiments with dynamic obstacles and correct-by-construction controllers

2013 SURF project description

- Mentor: Richard Murray

- Co-mentor: Scott C. Livingston

A major challenge in the design of autonomous robots is creating the decision-making logic that controls how a robot reacts to changes in its internal state (e.g., failure of a sensor or actuator) and changes in its environment (e.g., other robots or people interacting with it). This decision-making logic is often encoded as a finite state machine that must satisfy certain safety and reachability properties. While it may be easy to list the desired properties, manually building a finite state machine that has them is difficult. To address this, methods have been proposed that automatically construct a solution from a statement of the properties it should have. However, most such methods assume perfect models and thus may fail when actually implemented on a robot, where uncertainty seems unavoidable.

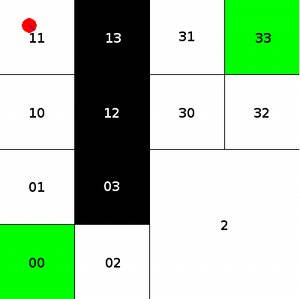

An example of this situation is mobile robot navigation to a goal in the presence of a human ("dynamic obstacle"). While it is difficult to place probabilities on human motion, we can bound how far the human can move in a fixed time period, and possibly find rules for motion in certain situations. These "rules" guide construction of a finite state machine that ensures the robot avoids collisions with people. There is a trade-off in richness of these rules and computation required to find correct strategies against them. There are at least two approaches to cope with this:

1. Use stochastic models and provide probabilistic guarantees of correctness.

2. Patch a given strategy online to account for a changing game while providing exact correctness.

Possible SURF activities

For a summer SURF project, you would design and conduct experiments to compare at least one algorithm from each of these two approaches. Some possible activities are:

- extend current experimental testbed by building "dynamic obstacles"---small vehicles that have random motion;

- propose and demonstrate scenarios to compare performance of several existing algorithms;

- study effects of uncertainty; e.g.,

- How does performance degrade with position uncertainty?

- At what noise level (or flawed actuator performance) do the original guarantees of correctness fail?

- To what extent can old solutions be updated despite uncertainty?

References

- C. Belta, A. Bicchi, M. Egerstedt, E. Frazzoli, E. Klavins, G.J. Pappas, "Symbolic Planning and Control of Robot Motion: Finding the Missing Pieces of Current Methods and Ideas," IEEE Robotics & Automation Magazine, 2007.

- S.C. Livingston, P. Prabhakar, A.B. Jose, R.M. Murray, "Patching task-level robot controllers based on a local µ-calculus formula," Caltech CDS tech report, 2012. http://resolver.caltech.edu/CaltechCDSTR:2012.003

- B. Johnson, F. Havlak, M. Campbell, H. Kress-Gazit, "Execution and Analysis of High-Level Tasks with Dynamic Obstacle Anticipation," Proc. of ICRA 2012.

- For a broad introduction including elementary motion planning, see S.M. LaValle's tutorial at ICRA 2012: "Motion planning for dynamic environments"; http://msl.cs.uiuc.edu/~lavalle/icra12/