SURF 2022: Evaluating Redundancy Between Test Executions for Autonomous Vehicles

2022 SURF: Evaluating Redundancy between Test Cases

- Mentor: Richard Murray

- Co-mentors: Apurva Badithela, Josefine Graebener

Project Description

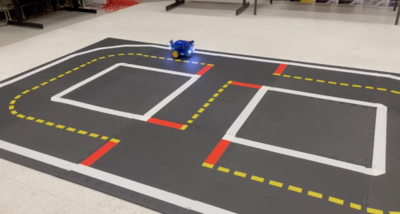

Testing autonomous systems requires defining the test environment, which comprises of test agents, obstacles, and test harnesses on the system under test. Test cases of varying complexity (length of the test, number of test agents and their strategies) could offer the same information on the system's ability to satisfy a requirement. Consider the following example of testing a miniature self-driving car on the Duckietown platform. The autonomous car to be tested has a controller that navigates indefinitely around a loop --- it needs to do lane following, avoid colliding with other cars and take unprotected left turns at intersections after reading appropriate road signs. The figure to the right shows a duckiebot on a simple layout; other duckiebots and mini road signs can be easily augmented to this setup.

- For example, tests can generally be classified into four different categories --- open-loop test in a static environment, open-loop test in a dynamic environment, reactive test in a static environment, and a closed-loop test in a reactive environment.

- For this SURF, we would like to implement a few tests in test environments of varying complexity on the Duckietown hardware. We would then like to characterize the test scenarios for when two tests are redundant and when they are not. We can show this by defining and computing a notion of information gain over the test and show that a more complex test may or may not offer any new insight regarding system performance.