SURF 2022: Evaluating Redundancy Between Test Executions for Autonomous Vehicles

2022 SURF: Evaluating Redundancy between Test Executions

- Mentor: Richard Murray

- Co-mentors: Apurva Badithela, Josefine Graebener

Project Description

For autonomy to be deployed in safety-critical settings, operational testing is imperative. However, principled methods for generating operational tests are still a young but growing research area. Since autonomous systems are complex and the domain of their operating environments is typically very large, it is not possible to exhaustively check or verify their behavior. Instead, we need an automated paradigm for selecting a small number of tests that are the most informative about the system. In this work, we want to formally characterize the notion of redundancy between two test executions.

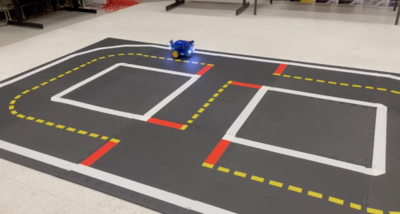

Testing autonomous systems requires defining the test environment, which comprises test agents, obstacles, and test harnesses on the system under test. Test cases of varying complexity (length of the test, number of test agents and their strategies) could offer the same information on the system's ability to satisfy a requirement. Consider the following example of testing a miniature self-driving car on the Duckietown platform. The autonomous car to be tested has a controller that navigates indefinitely around a loop: it needs to follow lanes, avoid colliding with other cars, and take unprotected left turns at intersections after reading the appropriate road signs. The figure above shows a duckiebot on a simple layout; other duckiebots and mini road signs can easily be added to this setup. The duckiebot under test has an off-the-shelf controller implemented on board for indefinite navigation around the track. In addition to the hardware setup, we have access to a simulator of the hardware setup that could be useful in designing our experiments.

For example, tests can generally be classified into four different categories:

- Open-loop test in a static environment -- The test parameters are defined prior to the start of the test and do not depend on the real-time state of the autonomous system during the test. The test environment has no agents with continuous dynamics. An example of this type of test would be to set up the track with obstacles that block the autonomous duckiebot's path and have the duckiebot start with some predefined initial pose and velocity.

- Open-loop test in a dynamic environment -- The test strategies of the test environment agents do not depend on the real-time state of the system under test. The test environment has agents with continuous dynamics. For example, in this test paradigm, we could have test environment duckiebots with predefined instructions on how to navigate around the track, and this pre-programmed logic does not change regardless of the behavior of the duckiebot under test.

- Reactive test in a static environment -- The test parameters depend on the real-time state of the system under test. The test environment does not have agents with continuous dynamics. For example, obstacles can be placed on the track, but instructions on where the duckiebot under test should navigate to could be generated in real time and depend on the trajectory of the duckiebot under test.

- Reactive test in a dynamic environment -- The test parameters depend on the real-time state of the system under test, and the strategies of the test agents in the environment are reactive to the state of the system. Considering the running example of testing a duckiebot on the track, this type of test would comprise test environment duckiebots with strategies that react to the duckiebot under test. A concrete example would be a test duckiebot slowing down so that it reaches an intersection at the same time as the duckiebot under test. This would prompt the duckiebot under test to account for the test duckiebot before proceeding through the intersection, which would be a more useful test than having the duckiebot drive through an empty intersection.
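The four categories above are determined by two yes/no questions: whether the test reacts to the real-time state of the system under test, and whether the environment contains agents with continuous dynamics. A minimal Python sketch of that bookkeeping follows. The names `Paradigm`, `ScenarioSpec`, and `classify` are hypothetical, introduced here only for illustration, and are not part of any Duckietown API.

```python
from dataclasses import dataclass
from enum import Enum


class Paradigm(Enum):
    """The four test categories listed above (names are illustrative)."""
    OPEN_LOOP_STATIC = "open-loop test in a static environment"
    OPEN_LOOP_DYNAMIC = "open-loop test in a dynamic environment"
    REACTIVE_STATIC = "reactive test in a static environment"
    REACTIVE_DYNAMIC = "reactive test in a dynamic environment"


@dataclass(frozen=True)
class ScenarioSpec:
    """Hypothetical description of a test scenario."""
    name: str
    # True if test parameters depend on the real-time state of the system under test.
    reactive: bool
    # True if the environment has agents with continuous dynamics (e.g. other duckiebots).
    dynamic: bool


def classify(spec: ScenarioSpec) -> Paradigm:
    """Map the two boolean attributes of a scenario to one of the four categories."""
    if spec.reactive:
        return Paradigm.REACTIVE_DYNAMIC if spec.dynamic else Paradigm.REACTIVE_STATIC
    return Paradigm.OPEN_LOOP_DYNAMIC if spec.dynamic else Paradigm.OPEN_LOOP_STATIC


# Example scenarios taken from the descriptions above.
blocked_path = ScenarioSpec("obstacles block the path", reactive=False, dynamic=False)
intersection = ScenarioSpec("test duckiebot matches arrival time", reactive=True, dynamic=True)
print(classify(blocked_path).value)
print(classify(intersection).value)
```

Keeping the two attributes separate, rather than storing only the category, makes it easy to ask which single attribute differs between two tests being compared for redundancy.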

For this SURF, we would like to implement a few tests in test environments of varying complexity (as described above) on the Duckietown hardware. We would then like to characterize when two tests are redundant and when they are not. For instance, are there open-loop test executions in a dynamic environment that can be replaced by an equivalent open-loop test in a static environment? Likewise, we would like to reason about redundancy between the following pairs of test paradigms: open-loop test in a dynamic environment vs. reactive test in a dynamic environment, and reactive test in a static environment vs. reactive test in a dynamic environment. We can start by defining and computing a notion of information gain over the test execution and show that a more complex test may or may not offer any new insight into system performance. If we can prove redundancy between test executions from different test environment paradigms, or find a counterexample that disproves it, we would like to implement the test executions on Duckietown to demonstrate this. In addition to the Duckietown hardware, we have access to the Duckietown simulator, which will be a useful tool for this study.
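The project leaves the notion of information gain open; defining it is part of the work. As one possible starting point, and purely as an assumption, the sketch below treats information gain as the reduction in Shannon entropy of a discrete belief over hypotheses about the system after a Bayesian update on one observed test outcome. The hypothesis labels, the likelihood values, and the function names are all made up for illustration.

```python
import math


def entropy(belief):
    """Shannon entropy in bits of a discrete distribution {hypothesis: probability}."""
    return -sum(p * math.log2(p) for p in belief.values() if p > 0)


def posterior(prior, likelihood):
    """Bayes update, where likelihood[h] = P(observed test outcome | hypothesis h)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("observed outcome has zero probability under the prior")
    return {h: w / total for h, w in unnormalized.items()}


def information_gain(prior, likelihood):
    """Entropy reduction from one observed outcome (assumed measure, not the project's)."""
    return entropy(prior) - entropy(posterior(prior, likelihood))


# Hypothetical belief about whether the controller satisfies a requirement.
prior = {"satisfies": 0.5, "violates": 0.5}

# Made-up likelihoods of the observed outcome under each hypothesis.
informative_test = {"satisfies": 0.9, "violates": 0.1}
uninformative_test = {"satisfies": 0.5, "violates": 0.5}

print(information_gain(prior, informative_test))
print(information_gain(prior, uninformative_test))
```

Under this assumed measure, a test whose outcome is equally likely under every hypothesis leaves the belief unchanged and gains nothing, however complex its environment is. Note that the entropy reduction for a single outcome can be negative; averaging over possible outcomes would give an expected gain that is never negative.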

Prerequisites

- Experience coding in Python

- Willingness to learn development with Docker and GitHub

- Distributed Computing and Formal Methods (CS 142), Robotics (ME 133abc, ME 134)

What you can expect from this SURF

- Work closely with graduate students on test case generation for autonomy

- Come up with theoretical insights (e.g., proving which classes of systems and test paradigms are equivalent)

- Write open-source code implementing algorithms that demonstrate these ideas

References

1. Duckietown. https://docs.duckietown.org/daffy/duckietown-robotics-development/out/index.html