SURF 2020: Test and Evaluation for Autonomy

Autonomous systems are an emerging technology with potential for growth and impact in safety-critical applications such as self-driving cars, space missions, distributed power grid. In these applications, a rigorous, proof-based framework for design, test and evaluation of autonomy is necessary.

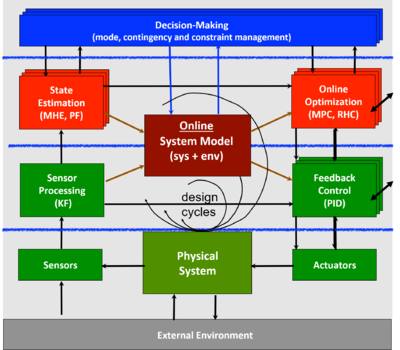

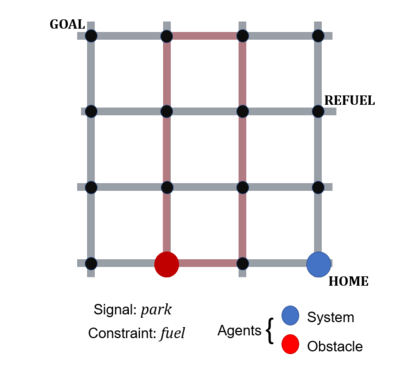

The architecture of autonomous systems can be represented in a hierarchy of levels (see figure below) with a discrete decision-making layer at the top and low-level controllers at the bottom. In this project, we will be focusing on testing at the top layer, that is, testing for discrete event systems. The requirements of the system under consideration can be represented as specifications in a formal language. Most commonly in theory, specifications are written in temporal logic languages such as Linear Temporal Logic (LTL), Signal Temporal Logic (STL), Computational Tree Logic (CTL), etc (See references 2 and 3 for an overview on writing specifications in temporal logic). In addition to the system and its specifications, we have an environment for which corresponding specifications can be written. The environment constitutes of other agents modeled in the problem statement that do not constitute the system. See Figure 2 below for an example scenario of the system / environment.

If the system and environment specifications are given as GR(1) LTL specifications, it is possible to synthesize correct-by-construction controllers using TuliP (Reference 5). More background on GR(1) formalism can be found in Reference 4. In this work, we use GR(1) specifications to describe system and environment requirements.

In testing, we check if the system has satisfied its specifications for a finite number of possible combinations of initial conditions, model parameters and environment actions. From test data, we can then evaluate whether the system has passed the test. The challenge is how do we algorithmically generate the "best" (finite) set of tests for an autonomous system given the specifications of the system and the environment? To this end, we would like the SURF student to develop a Test Plan Monitor for discrete decision-making behaviors.

1)How do we leverage test data to design the next set of tests? Specifically, for each trace of system actions, can we generate a set of environment traces to form the next round of tests?

2)Use test data to identify if the fault was caused because of information not captured in the discrete system model.

It would be useful for the SURF student to know MATLAB and Python.

References:

1. Bartocci, Ezio, et al. "Specification-based monitoring of cyber-physical systems: a survey on theory, tools and applications." Lectures on Runtime Verification. Springer, Cham, 2018. 135-175. Link

2. RM Murray, U. Topcu, N. Wongpiromsarn. HYCON-EECI, 2013 Link

3. On Signal Temporal Logic. A. Donze, 2014 Link

4. RM Murray, U. Topcu, N. Wongpiromsarn. HYCON-EECI, 2013 Link

5. Wongpiromsarn, Tichakorn, et al. "TuLiP: a software toolbox for receding horizon temporal logic planning." Proceedings of the 14th international conference on Hybrid systems: computation and control. ACM, 2011. Link